On November 5, 2001, the history of warfare changed forever. On that date, an unmanned Predator drone armed with Hellfire missiles killed Mohammed Atef, a known Al-Qaida military chief and the son-in-law to Osama Bin Laden. From a purely strategic standpoint, this was significant in that it proved the utility of a new kind of weapon system. In terms of the bigger picture, it marked the start of a new kind of warfare.

If the whole of human history has taught us anything, it’s that the course of that history changes when societies find new and devastating ways to wage war. In ancient times, to wage war, you needed to invest time and resources to train skilled warriors. That limited the scope and scale of war, although some did make the most of it.

Then, firearms came along and suddenly, you didn’t need a special warrior class. You just needed to give someone a gun, teach them how to use it, and organize them so that they could shoot in a unit. That raised both the killing power and the devastating scale of war. The rise of aircraft and bombers only compounded that.

In the 20th century, warfare became so advanced and so destructive that the large-scale wars of the past just aren’t feasible anymore. With the advent of nuclear weapons, the potential dangers of such a war are so great that no spoils are worth it anymore. In the past, I’ve even noted that the devastating power of nuclear weapons has had a positive impact on the world, albeit for distressing reasons.

Now, drone warfare has added a new complication. Today, drone strikes are such a common tactic that they barely make the news. The only time they are noteworthy is when one of those strikes incurs heavy civilian casualties. They have also sparked serious legal questions when the targets of these strikes are American citizens. While these events are both tragic and distressing, there’s no going back.

As with gunpowder before it, the genie is out of the bottle. Warfare has evolved and will never be the same. If anything, the rise of combat drones will only accelerate the pace of change with respect to warfare. As with any weapon before it, some of that change will be negative, as civilian casualties often prove. However, there are also potential benefits that could change more than just warfare.


Those benefits aren’t limited to keeping soldiers out of combat zones. From a cost standpoint, drones are significantly cheaper. A single manned F-22 Raptor costs approximately $150 million while a single combat drone costs about $16 million. That makes drones roughly nine times cheaper and you don’t need to be a combat ace to fly one.

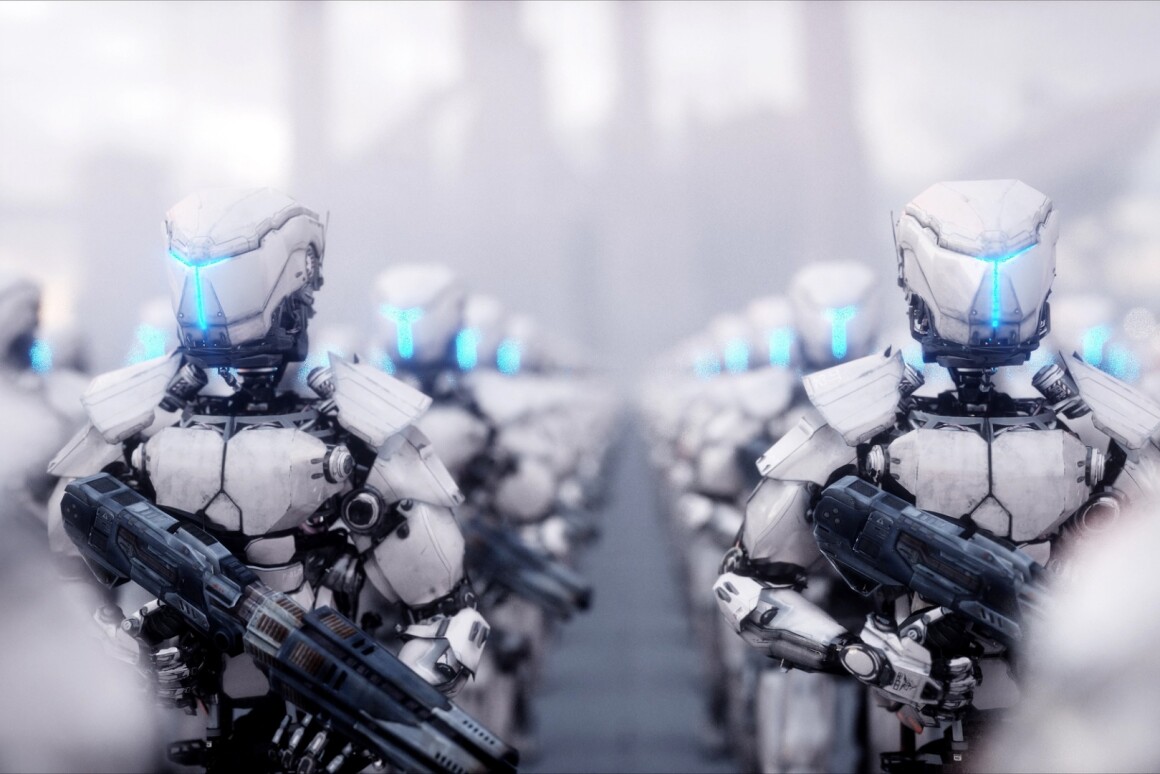

However, those are just logistical benefits. It’s the potential that drones have in conjunction with advanced artificial intelligence that could make them every bit as influential as nuclear weapons. Make no mistake. There’s plenty of danger in that potential. There always is with advanced AI. I’ve even talked about some of those risks. Anyone who has seen a single “Terminator” movie understands those risks.


When it comes to warfare, though, risk tolerance tends to be more complicated than anything you see in the movies. The risks of AI and combat drones have already sparked concerns about killer robots in the military. As real as those risks are, there’s another side to that coin that rarely gets discussed.

Think back to any story involving a drone strike that killed civilians. There are plenty of incidents to reference. Those drones didn’t act on orders from Skynet. They were ordered by human military personnel, attempting to make tactical decisions on whatever intelligence they had available at the time. The drones may have done the killing, but a human being gave the order.

With all due credit to the highly trained men and women in the military, they’re still flawed humans at the end of the day. No matter how ethically they conduct themselves, their ability to assess, process, and judge a situation is limited. When those judgments have lives on the line, both the stakes and the burdens are immense.

Once more advanced artificial intelligence enters the picture, the dynamics of drone warfare change considerably. This isn’t pure speculation. The United States Military has gone on record saying they’re looking for ways to integrate advanced AI into combat drones. While they stopped short of confirming they’re working on their own version of Skynet, the effort to merge AI and combat drones is underway.

In an overly simplistic way, they basically confirmed they’re working on killer robots. They may not look like the Terminator or Ultron, but their function is similar. They’re programmed with a task and that task may or may not involve killing an enemy combatant. At some point, a combat drone is going to kill another human being purely based on AI.

That assumes it hasn’t already happened. It’s no secret that the United States Military maintains shadowy weapons programs that are often decades ahead of their time. Even if it hasn’t happened yet, it’s only a matter of time. Once an autonomous drone kills another human being, we’ll have officially entered another new era of warfare.

In this era, there are no human pilots directing combat drones from afar. There’s no human being pulling the trigger whenever a drone launches its lethal payload into a combat situation. The drones act of their own accord. They assess all the intel they have on hand, process it at speeds far beyond that of any human, and render decisions in an instant.

It sounds scary and it certainly is. Plenty of popular media, as well as respected public figures, paint a terrifying picture of killer robots killing without remorse or concern. However, those worst-case scenarios overlook both the strategic and practical aspects of this technology.

In theory, a combat drone with sufficiently advanced artificial intelligence will be more effective than any human pilot could ever be in a military aircraft. It could fly better, carrying out maneuvers that would strain or outright kill even the most durable pilots. It could react better under stressful circumstances. It could even render better judgments that save more lives.

Imagine, for a moment, a combat drone with systems and abilities so refined that no human pilot or officer could hope to match it. This drone could fly into a war zone, analyze a situation, zero in on a target, and attack with such precision that there’s little to no collateral damage.

If it wants to take a single person out, it could simply fire a high-powered laser that hits them right in the brain stem.

If it wants to take out someone hiding in a bunker, it could utilize a smart bullet or a rail gun that penetrates every level of shielding and impacts only a limited area.

If it wants to take out something bigger, it could coordinate with other drones to hit with traditional missiles in such a way that the target has no hope of defending itself.

Granted, drones this advanced probably won’t be available at the outset. Every bit of new technology goes through a learning curve. Just look at the first firearms and combat planes for proof of that. It takes time, refinement, and incentive to make a weapons system work. Even before it’s perfected, it’ll still have an impact.

At the moment, the incentives are definitely there. Today, the general public has a very low tolerance for casualties on both sides of a conflict. The total casualties of the second Iraq War currently sit at 4,809 coalition forces and 150,000 Iraqis. While that’s only a fraction of the casualties suffered in the Vietnam War, most people still deem those losses unacceptable.

It’s no longer feasible, strategically or ethically, to just blow up an enemy and lay waste to the land around them. Neither politics nor logistics will allow it. In an era where terrorism and renegade militias pose the greatest threat, intelligence and precision matter. Human brains and muscle just won’t cut it in that environment. Combat drones, if properly refined, can do the job.

Please note that’s a big and critical if. Like nuclear weapons, this is a technology that nobody in any country can afford to misuse. In the event that a combat drone AI develops into something akin to Skynet or Ultron, then the amount of death and destruction it could bring is incalculable. These systems are already designed to kill. Advanced AI will just make them better at killing than any human will ever be.

It’s a worst-case scenario, but one we’ve managed to avoid with nuclear weapons. With advanced combat drones, the benefits might be even greater than no large-scale wars on the level of World War II. In a world where advanced combat drones keep terrorists and militias from ever becoming too big a threat, the potential benefits could be unprecedented.

Human beings have been waging bloody, brutal wars for their entire history. Nuclear weapons may have made the cost of large wars too high, but combat drones powered by AI may finally make war itself obsolete.
